Minimizing experimentation impact on SEO

In mid-2021, Google changed its core algorithm [1] to incorporate user experience signals, called Core Web Vitals, into its rankings. Specifically, sites that load quickly, are visually stable, and respond quickly to user input are more likely to rank higher than competing sites that fall short on those measures.

As of March 2023, Google is measuring three metrics for each site and using them to change rankings: Largest Contentful Paint (LCP), Cumulative Layout Shift (CLS), and First Input Delay (FID). Google takes measurements via anonymized tracking embedded in their Chrome browser running on mobile and desktop devices. Their measurements are based on aggregated data from real users who visit your site, not just from Google's automated crawlers.

Warning

Google aggregates each of these metrics over a rolling 28-day window, so if you have a bad deployment that tanks one of these metrics for a day, that day's data stays in the window and can have a negative impact on rankings for weeks afterward!

Without diving deeply into the technical details, you can think of the three metrics like this:

| Metric | Description |
|---|---|
| Largest Contentful Paint (LCP) | Did the page load quickly for the user? Specifically, did the large hero or title at the top of the page load quickly? |
| Cumulative Layout Shift (CLS) | Did elements of the page move around a lot after it initially loaded? Or did everything pretty much stay where it was? |
| First Input Delay (FID) | Could the user click, scroll, and otherwise interact with your site quickly? |

Since Google is collecting this data from real users who are using your site, your A/B tests and other experiments can easily end up having a negative impact on these metrics. In my experience, it's particularly common for experiments to cause negative impacts on CLS and LCP.

Even when you aren't actively running any experiments on a page, some experimentation tools can hurt these metrics simply because of the way they are designed. For example, Optimizely Web prevents every page from loading until it has made a request to Optimizely's servers to fetch experiment configurations, which has a negative impact on LCP.

In order to minimize the negative impact of your experiments on Core Web Vitals, consider doing experiment variation assignment and experience modification server-side or at the edge instead of client-side.
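
As a rough illustration of what server-side assignment can look like, here is a minimal Python sketch. It hashes a user ID to pick a variation deterministically and renders the final HTML before the response is sent, so the browser never has to wait on a client-side script or shift content after load. The names `assign_variation` and `render_hero`, the experiment key, and the headline copy are all hypothetical, and hash-based bucketing is just one common approach, not the method of any particular tool.

```python
import hashlib


def assign_variation(user_id: str, experiment_key: str, variations: list[str]) -> str:
    """Deterministically map a user to one variation of an experiment."""
    # Hash the experiment key together with the user ID so the same user
    # can land in different buckets for different experiments.
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).digest()
    # Treat the first 8 bytes as an integer and reduce it to a bucket index.
    bucket = int.from_bytes(digest[:8], "big") % len(variations)
    return variations[bucket]


def render_hero(user_id: str) -> str:
    """Return the hero HTML with the variation already applied on the server."""
    # Hypothetical copy for a hypothetical "hero-headline" experiment.
    headlines = {
        "control": "Welcome back",
        "treatment": "Start your free trial",
    }
    # Sort the keys so the variation order is stable across deployments.
    variation = assign_variation(user_id, "hero-headline", sorted(headlines))
    return f'<h1 data-variation="{variation}">{headlines[variation]}</h1>'
```

Because the assignment depends only on the user ID and the experiment key, the same visitor sees the same variation on every request without any stored state, and the same function could run in an edge worker instead of the origin server.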

No matter what you do, you can use the free tool WebPageTest to measure the impact of your changes on your Core Web Vitals in a staging environment before deploying to production.

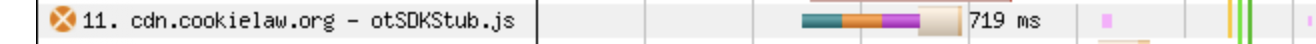

Watch out for resources like this:

Redirect Testing

In some cases growth teams actually just want to build two versions of a page that are published at different URLs.

If you are going to do this, I strongly recommend you review Google's documentation on the subject before you start: Minimize A/B testing impact in Google Search
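
Two points from that documentation are worth sketching: use a temporary (302) redirect rather than a permanent (301) one, and put a `rel="canonical"` link on the variant page pointing back at the original URL. The Python sketch below shows both under stated assumptions: the URLs are placeholders, `redirect_for` and `canonical_tag` are hypothetical helpers, and the variation name is assumed to come from whatever assignment logic you already use.

```python
from http import HTTPStatus
from typing import Optional

# Placeholder URLs for illustration only.
ORIGINAL_URL = "https://example.com/pricing"
VARIANT_URL = "https://example.com/pricing-b"


def redirect_for(variation: str) -> Optional[tuple[int, dict[str, str]]]:
    """Return (status, headers) for visitors in the variant, or None to serve the original."""
    if variation != "treatment":
        return None  # control visitors stay on the original URL
    # 302 marks the redirect as temporary, so the original URL should stay indexed.
    return HTTPStatus.FOUND.value, {"Location": VARIANT_URL}


def canonical_tag() -> str:
    """Link tag for the variant page's <head>, pointing at the original URL."""
    return f'<link rel="canonical" href="{ORIGINAL_URL}">'
```

In a real application you would return the status and headers through your web framework's response object; the tuple here just keeps the sketch framework-neutral.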

[1] Google Search Central Blog, "More time, tools, and details on the page experience update", retrieved 2023-03-30.